Is AI Safety a Market Failure?

Market Forces are Underrated in AI Safety

Often, advocates will claim that AI Safety is a market failure, where AI labs do not have the appropriate incentives to invest in safety research that prevents catastrophic loss of control or misuse scenarios. [1]

While we agree that market forces might somewhat under-provide AI Safety, we argue that existing incentives faced by AI labs significantly mitigate the extent of this market failure. In this article, we will review two major arguments for AI Safety being a market failure and our arguments for why these arguments are overstated.

1. Externalities and AI Safety

The first major argument for AI Safety being a market failure is that AI risk is an externality and thus basic economic theory tells us that AI Safety will be under-provided. While this argument has some truth in it, this argument overlooks that AI labs internalize much of the potential harm associated with AI risk, reducing the severity of the externality.

Imagine a catastrophic AI failure causing significant human casualties. Such an incident would severely damage the reputation of the responsible AI lab, trigger massive financial liabilities, prompt public outrage, and could lead to extreme regulatory responses, potentially even a complete ban on advanced AI research.If these outcomes seem crazy, note that right now 50% of people support banning AI more powerful than GPT-4. After a disaster, drastic measures—such as permanent bans on advanced AI—could rapidly become politically acceptable and even demanded by the public

AI Safety research also directly benefits product quality. The main technical safety contribution to date—Reinforcement Learning from Human Feedback (RLHF)—was essential in developing useful AI assistants like GPT-3.5. Aligning AI systems with human preferences isn’t merely altruistic; it directly enhances product marketability and profitability.

That’s why AI labs are paying $300k-1M+ total compensation for scientists to work on alignment and AI safety. Such high compensation contrasts sharply with other severe market failures, such as factory farming, where similarly high salaries for harm reduction roles are rarely observed. We also don’t see high salaries for AI Safety in the government, indicating there is more optimization for AI Safety in the market, hardly something you normally see in cases of market failure.

2. Arms Races Are Compatible with Safety

A more advanced argument for AI Safety being a market failure is that the competitive dynamics with multiple firms lead to over-investment in capabilities and under-investment in safety. The argument goes that if e.g. Firm A decides to not add some useful but dangerous capability to their AI, then Firm B will just speed ahead while investing nothing in safety. Often this argument will explicitly appeal to the Prisoner’s Dilemma, a classic game theory example where everyone would be made better off by cooperating, but the equilibrium involves everyone defecting. The argument claims AI labs are in the same situation, where they all want to invest more in safety, but the only equilibrium is going as fast as possible with as little safety as possible.

We think this argument is substantially oversimplified for two main reasons.

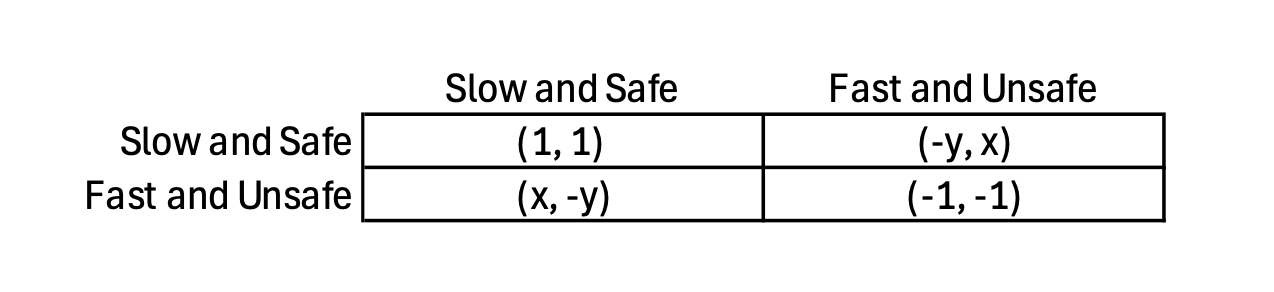

a. Defecting May Not be a Dominant Strategy in an AI Arms Race: In the prisoner’s dilemma, defecting is a dominant strategy. No matter what your opponent does, defecting leaves you better off. See the graph below for an illustration.

However, an AI arms race may be crucially different. Unlike the classic Prisoner’s Dilemma, defecting—pursuing more rapid capability advancement at the expense of safety—is not always beneficial. AI labs face substantial risks (reputation, regulation, liability) from unsafe behavior, meaning the potential cost of defection can outweigh short-term competitive advantages. Overall, defecting may or may not be in your interest depending on how much return there is to having slightly more capabilities and how much harm there is by having a slightly less safe model. Therefore, we could model the AI Arms race in the following way, where the variable x controls the benefit of defecting. If x <= 1, i.e. the returns to being faster is less than the cost of less safety, then both players playing the safe outcome is an equilibrium.

Moreover, in this simplified model there are only two options, safe and unsafe. In a more realistic model, there would be a continuum of actions and whatever margin you are currently at controls the benefit of defecting. Even if x starts being > 1, it will fall as you get close to guaranteeing a disaster, so even if cooperation on the fully safe outcome is not possible, partial cooperation on safety is.

b. AI Arms Races are not One Shot Games. The canonical model of the prisoner’s dilemma involves a single turn where players make a decision and never interact again. But AI Arms Races occur over time with many repeated decisions on how much to invest in capabilities and safety. In game theory parlance, an AI Arms Race is a repeated game. A very famous game theory result, the Folk Theorem, shows that you can get cooperation in the prisoner’s dilemma in repeated games under a wide variety of settings! Repeated interactions allow firms to build trust and punish defection through subsequent actions, significantly increasing the possibility of stable cooperation over time.

Of course, repeated games tend to have many equilibria, so perhaps the current market is not in this good cooperative equilibrium. But, this result shows that cooperation is not something that the market forecloses. Indeed, current examples exist where AI labs have responsible scaling policies and alignment research—indicating partial cooperation already occurs.

Conclusion:

We have argued that the claim of AI Safety as a severe market failure is overstated, as substantial incentives already exist for labs to prioritize safety [3]. Nevertheless, the importance of AI Safety remains paramount. Problems do not require a perfect market failure to warrant serious public attention and proactive policy solutions. The market may demand a solution, but humanity still needs to provide the talent to solve it! [4]

Footnotes:

[1] Throughout the article, we will use AI Safety to refer to investments that prevent direct danger from AI systems by loss of control or misuse. This is distinct from research that aims to prevent a concentration of power, optimal governance, etc.

[2] The cost of someone defecting against you is controlled by y. The value y should take in an AI arms race is somewhat unclear, but it doesn’t matter much for our analysis.

[3] We are also sympathetic to more advanced Econ-style arguments for underinvestment that focus on AI firms with heterogeneous beliefs about how much safety research is needed. We are working out a formal model of this.

[4] We are also somewhat sympathetic to the implication that other aspects of AI may be more neglected than Safety. For example, perhaps preventing a concentration of power after AGI is more neglected because no private actor has any incentive to prevent that.